In the previous article, on chatting with your documents, we converted our documents to embeddings by calling the OpenAI API. We then stored the embeddings in a FAISS index for fast similarity search and retrieval. But what exactly are embeddings? Let's find out.

What are embeddings?

Embeddings, in the context of machine learning and natural language processing (NLP), refer to a mathematical representation of data, typically words, phrases, or entire documents, in a continuous vector space.

Embeddings enable the conversion of discrete, symbolic data, such as text, into continuous numerical vectors, which can be more easily processed by machine learning models.

Why are embeddings useful?

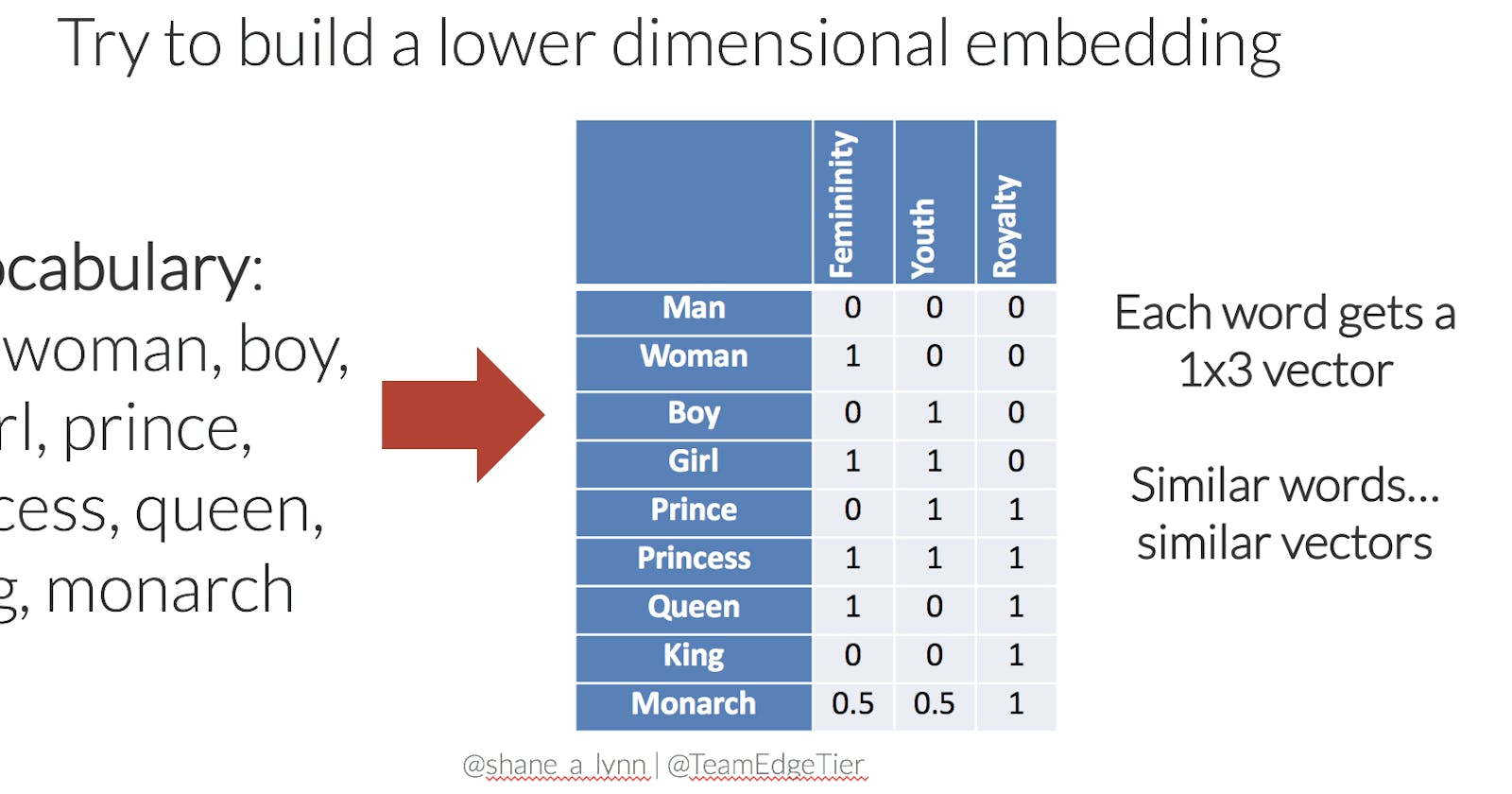

The main idea behind embeddings is to capture the semantic meaning or relationships between data elements, making it easier for models to identify patterns, similarities, and relationships in the data.

For instance, word embeddings represent words as vectors in a high-dimensional space, where similar words or words with related meanings have similar vector representations, i.e., they are close to each other in the vector space.
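To make "close to each other in the vector space" concrete, here is a minimal sketch that compares a few word vectors with cosine similarity. The vectors are hand-made, hypothetical values in only four dimensions; they do not come from any trained model and only illustrate the idea.

```python
import math

# Hypothetical, hand-made 4-dimensional vectors (not from a trained model).
toy_vectors = {
    "cat": [0.9, 0.8, 0.1, 0.0],
    "dog": [0.8, 0.9, 0.2, 0.1],
    "car": [0.1, 0.0, 0.9, 0.8],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; values near 1.0 mean similar direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


cat_dog = cosine_similarity(toy_vectors["cat"], toy_vectors["dog"])
cat_car = cosine_similarity(toy_vectors["cat"], toy_vectors["car"])

print(f"cat vs dog: {cat_dog:.3f}")
print(f"cat vs car: {cat_car:.3f}")
```

With these made-up values, "cat" and "dog" score much higher than "cat" and "car", which is the behaviour we expect from real word embeddings.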

Embeddings for Dummies

To explain embeddings to a layman, let's use a simple analogy. Imagine you have a collection of fruits, and you want to represent them in a way that captures their similarities and differences. One way to do this is to describe each fruit based on some features, such as colour, shape, and taste.

Suppose we choose three features: sweetness, sourness, and size. We can assign a number to each of these features, creating a 3-dimensional vector for each fruit. For example:

Apple: [4, 2, 3] (medium sweetness, a bit sour, and medium-sized)

Orange: [3, 3, 2] (medium sweetness, medium sourness, and small-sized)

Watermelon: [5, 1, 8] (very sweet, a bit sour, and large-sized)

These vectors are the "embeddings" for the fruits. When we plot them in a 3D space, we can see that similar fruits (e.g., apple and orange) are closer to each other, while dissimilar ones (e.g., apple and watermelon) are further apart.
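We can check this claim with a few lines of Python. The sketch below uses the fruit vectors from the example above and measures the straight-line (Euclidean) distance between them. Euclidean distance is just one possible choice of distance, used here as an assumption for illustration.

```python
import math

# Fruit vectors from the example above: [sweetness, sourness, size].
fruits = {
    "apple": [4, 2, 3],
    "orange": [3, 3, 2],
    "watermelon": [5, 1, 8],
}


def distance(name_a, name_b):
    """Euclidean distance between the vectors of two fruits."""
    return math.dist(fruits[name_a], fruits[name_b])


apple_orange = distance("apple", "orange")
apple_watermelon = distance("apple", "watermelon")

print(f"apple <-> orange:     {apple_orange:.2f}")
print(f"apple <-> watermelon: {apple_watermelon:.2f}")
```

Apple and orange end up about 1.73 apart, while apple and watermelon are about 5.20 apart, mostly because of the size feature.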

In the context of text data, embeddings work similarly, but the dimensions are usually much larger (e.g., 100, 300, or even more). By converting words or phrases into numerical vectors, machine learning algorithms can more easily understand the relationships between them, such as their semantic meanings, similarity, or context.

In the example above, we used just a 3-dimensional vector, but machine learning models deal with much higher dimensionality.

Dimensionality of OpenAI Models

OpenAI's GPT models, including GPT-4, use token embeddings as part of their architecture. The dimensionality of these embeddings depends on the configuration of the model. For example, the GPT-2 and GPT-3 families each come in several sizes (such as small, medium, large, and xl), and each size uses a different number of dimensions for its embeddings.

In these models, the dimensionality of the embeddings is set by the hidden size parameter (n_embd in the GPT-2 code, d_model in the GPT-3 paper). For example, the GPT-2 "large" configuration uses a hidden size of 1280, while the 2.7-billion-parameter GPT-3 model uses 2560. These numbers are the dimensions of the embeddings used in the model.
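As a rough illustration of what the hidden size controls, the sketch below builds a tiny embedding table with one row per token and n_embd columns. The vocabulary, the value n_embd = 8, and the random numbers are all assumptions for the example; in a real model the table is learned during training and is far larger.

```python
import random

random.seed(0)

vocab = ["the", "cat", "sat", "on", "mat"]  # toy vocabulary (assumption)
n_embd = 8  # toy hidden size; real models use hundreds or thousands

# The embedding table has shape (vocab_size, n_embd): one row per token.
# Random values stand in for weights that a real model would learn.
embedding_table = [[random.uniform(-1, 1) for _ in range(n_embd)] for _ in vocab]
token_to_id = {token: i for i, token in enumerate(vocab)}


def embed(tokens):
    """Look up the embedding row for each token."""
    return [embedding_table[token_to_id[t]] for t in tokens]


vectors = embed(["the", "cat", "sat"])
print(len(vectors), "tokens, each a vector of length", len(vectors[0]))
```

Changing n_embd changes the length of every vector the model works with, which is why it is a core part of a model's configuration.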

Popular vectorization libraries

There are several popular methods to generate embeddings, such as Word2Vec, GloVe, and FastText for words, and Doc2Vec for documents. These techniques use unsupervised learning algorithms to create embeddings based on co-occurrence statistics, neural networks, or other approaches.
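To give a feel for what "co-occurrence statistics" means, here is a minimal sketch that counts which words appear near each other in a tiny, made-up corpus and uses those counts as crude word vectors. This is not how Word2Vec, GloVe, or FastText are implemented; the corpus, window size, and helper names are assumptions chosen for illustration.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus (assumption); real training data has millions of sentences.
corpus = [
    "i like sweet apples",
    "i like sweet oranges",
    "i drive fast cars",
]
window = 2  # how many neighbours on each side count as context

# cooccurrence[word][neighbour] = number of times neighbour appears near word.
cooccurrence = defaultdict(Counter)
for sentence in corpus:
    tokens = sentence.split()
    for i, word in enumerate(tokens):
        start = max(0, i - window)
        end = min(len(tokens), i + window + 1)
        for j in range(start, end):
            if j != i:
                cooccurrence[word][tokens[j]] += 1

vocab = sorted({token for sentence in corpus for token in sentence.split()})


def count_vector(word):
    """Represent a word by its co-occurrence counts with every vocabulary word."""
    return [cooccurrence[word][other] for other in vocab]


print(vocab)
print("apples :", count_vector("apples"))
print("oranges:", count_vector("oranges"))
print("cars   :", count_vector("cars"))
```

Because "apples" and "oranges" appear in the same contexts, they get identical count vectors here, while "cars" gets a different one. Real methods compress this kind of signal into dense, lower-dimensional vectors.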

In addition to NLP, embeddings can also be used in other domains, such as collaborative filtering for recommendation systems, where user and item embeddings can help capture preferences and similarities.
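As a hedged sketch of the recommendation idea, the code below scores items for a user by taking the dot product of a user embedding and an item embedding. The names, the 2-dimensional vectors, and the meaning of each dimension are hypothetical; a real system would learn these embeddings from interaction data.

```python
# Hypothetical 2-dimensional embeddings: [likes action, likes romance].
user_embeddings = {
    "alice": [0.9, 0.1],
    "bob": [0.2, 0.8],
}
item_embeddings = {
    "action_movie": [1.0, 0.0],
    "romance_movie": [0.0, 1.0],
    "romantic_thriller": [0.6, 0.6],
}


def score(user, item):
    """Dot product of user and item embeddings; higher means a better match."""
    return sum(u * v for u, v in zip(user_embeddings[user], item_embeddings[item]))


def recommend(user):
    """Return all items ordered from best to worst match for the user."""
    return sorted(item_embeddings, key=lambda item: score(user, item), reverse=True)


print("alice:", recommend("alice"))
print("bob:  ", recommend("bob"))
```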

Show some code!

To generate word embeddings in Python, you can use popular libraries like Gensim or spaCy.

Gensim with Word2Vec

First, you need to install Gensim:

$ pip install gensim

Then, you can use the following code to train Word2Vec embeddings:

import gensim.downloader as api

from gensim.models import Word2Vec

# Load a dataset for training

dataset = api.load('text8')

# Train a Word2Vec model

model = Word2Vec(sentences=dataset, vector_size=100, window=5, min_count=1, workers=4, epochs=5)

# Get the embedding for a word

word = 'computer'

embedding = model.wv[word]

print(f"Embedding for '{word}': {embedding}")

The equation "king - man + woman" is a popular example of a word analogy task often used to demonstrate the power of word embeddings, such as Word2Vec. The idea is to find a word that relates to "woman" in the same way as "king" relates to "man." When using pre-trained word embeddings, the result of this equation is often "queen," illustrating that word embeddings can capture semantic relationships between words.

Here's an example using the Gensim library and pre-trained word embeddings:

import gensim.downloader as api

# Load pre-trained word embeddings

model = api.load('glove-wiki-gigaword-100')

# Perform the analogy task: king - man + woman = ?

result = model.most_similar(positive=['king', 'woman'], negative=['man'], topn=1)

print(result)

This code prints output similar to:

[('queen', 0.7698541283607483)]

The result suggests that "queen" is the closest word to the vector resulting from the equation "king - man + woman." The number 0.7698541283607483 represents the similarity score (cosine similarity) between the resulting vector and the vector for "queen."
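To show what happens behind this kind of analogy query, here is a simplified sketch in plain Python. It uses hypothetical, hand-made 3-dimensional vectors instead of trained embeddings, adds and subtracts them, and picks the remaining word with the highest cosine similarity. Gensim's actual implementation differs in its details (for example, it normalizes vectors before combining them), so treat this only as an approximation of the idea.

```python
import math

# Hypothetical hand-made vectors: [royalty, masculinity, femininity].
vectors = {
    "king": [0.9, 0.9, 0.1],
    "queen": [0.9, 0.1, 0.9],
    "man": [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
    "child": [0.1, 0.5, 0.5],
}


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def analogy(positive, negative):
    """Add the positive vectors, subtract the negative ones, return the closest other word."""
    dims = len(next(iter(vectors.values())))
    target = [0.0] * dims
    for word in positive:
        target = [t + v for t, v in zip(target, vectors[word])]
    for word in negative:
        target = [t - v for t, v in zip(target, vectors[word])]
    # Skip the query words themselves, as analogy queries usually do.
    candidates = [w for w in vectors if w not in positive and w not in negative]
    return max(candidates, key=lambda w: cosine_similarity(target, vectors[w]))


best = analogy(positive=["king", "woman"], negative=["man"])
print(best)
```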

Vector Search Engines

Vector search engines or similarity search engines are designed to efficiently store, manage, and search for high-dimensional vectors, like the ones generated by embeddings. These databases are optimized for nearest neighbour search, which helps find the most similar vectors to a given query vector. Some popular vector search engines include:

FAISS (Facebook AI Similarity Search): A library developed by Facebook Research that provides efficient similarity search and clustering of dense vectors. It is written in C++ and has Python bindings. GitHub: github.com/facebookresearch/faiss

Annoy (Approximate Nearest Neighbors Oh Yeah): A C++ library with Python bindings developed by Spotify, optimized for memory usage and loading speed. It is particularly useful for large-scale nearest-neighbour searches. GitHub: github.com/spotify/annoy

HNSW (Hierarchical Navigable Small World): A graph-based algorithm for approximate nearest neighbour search. It is implemented in several libraries, such as hnswlib, a header-only C++ library with Python bindings. GitHub (hnswlib): github.com/nmslib/hnswlib

NMSLIB (Non-Metric Space Library): A C++ library with Python bindings for similarity search in generic non-metric spaces, supporting various similarity search algorithms, including HNSW. GitHub: github.com/nmslib/nmslib

Milvus: An open-source vector database built on top of FAISS, NMSLIB, and other similarity search libraries. It provides a more comprehensive solution for managing and searching large-scale vector data, with support for both approximate and exact nearest neighbour searches. GitHub: github.com/milvus-io/milvus

Chroma: An open-source embedding database. Chroma makes it easy to build LLM apps by making knowledge, facts, and skills pluggable for LLMs. GitHub: github.com/chroma-core/chroma

Vertex AI Matching Engine: A vector database by Google that leverages the unique characteristics of embedding vectors to index them efficiently, for easy and scalable search and retrieval of similar embeddings.
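To illustrate the problem these engines solve, here is a minimal brute-force nearest neighbour search in plain Python. It compares the query against every stored vector, which is exact but slow for large collections; the engines listed above use specialized index structures to avoid this full scan. The document ids and vectors are hypothetical.

```python
import heapq
import math

# Hypothetical stored vectors keyed by document id.
index = {
    "doc_a": [0.1, 0.9, 0.0],
    "doc_b": [0.8, 0.1, 0.1],
    "doc_c": [0.2, 0.8, 0.1],
    "doc_d": [0.0, 0.1, 0.9],
}


def nearest_neighbours(query, k=2):
    """Exact k-nearest-neighbour search by Euclidean distance (scans every vector)."""
    return heapq.nsmallest(k, index, key=lambda doc_id: math.dist(query, index[doc_id]))


query = [0.1, 0.85, 0.0]
results = nearest_neighbours(query, k=2)
print(results)
```

The cost of this approach grows linearly with the number of stored vectors, which is why approximate methods such as HNSW become attractive at scale.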

Vector Plugins and extensions

Elasticsearch: While primarily a text-based search engine, Elasticsearch also supports vector search through its dense_vector field type and similarity functions such as cosine similarity and Euclidean distance.

Postgres/pgvector: A Postgres extension that provides vector similarity search. GitHub: github.com/pgvector/pgvector
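As a small illustration of the Elasticsearch option, the sketch below builds an index mapping with a dense_vector field as a Python dictionary and prints it as JSON. The field names "text" and "embedding" and the dims value of 3 are assumptions for the example; in practice dims must match the dimensionality of your embeddings, and the mapping would be sent to Elasticsearch when creating the index.

```python
import json

# Hypothetical index mapping: field names and dims are illustrative only.
index_mapping = {
    "mappings": {
        "properties": {
            "text": {"type": "text"},
            "embedding": {"type": "dense_vector", "dims": 3},
        }
    }
}

mapping_json = json.dumps(index_mapping, indent=2)
print(mapping_json)
```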

We have covered a lot of ground in this article, and it may take some time to digest. It also raises new questions for us to explore. How do we actually calculate similarity? What is the distance between vectors? How do we represent vectors? We will cover these one by one in upcoming articles.